The History of the CPU: The Evolution of Modern Processors

The First Giants

In the infancy of computing, everything was large. Engineers were just beginning to figure out how to use electronics to manipulate data, and the rewards of miniaturization were a long way off. ENIAC, widely regarded as the first general-purpose electronic computer, contained over 17,000 vacuum tubes. It consumed roughly 150 kilowatts of power and weighed as much as fifteen large cars. It was, in other words, monstrous.

In those days, the idea of a central processing unit did not exist as we know it today. The main reason was that the scope of computers was simply not large enough to distinguish the functions of a central processing unit from the rest of the computer. ENIAC used punch cards to store data. There was no long-term memory, video output, or sound output. ENIAC itself was something akin to a gigantic CPU sitting in a room.

The First Modern Designs

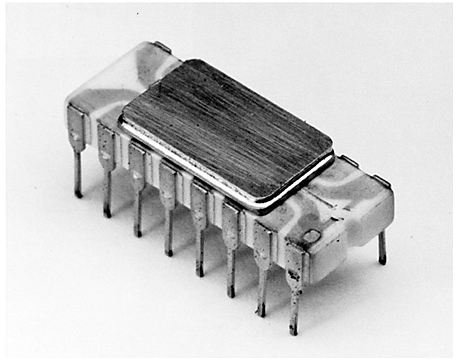

The first modern CPU was built under a name that everyone today recognizes: Intel. The Intel 4004 microprocessor, released in 1971 at the request of calculator manufacturer Busicom, was remarkable because it was the first commercially available general-purpose CPU on a single chip. It could be programmed to perform various calculations based on instructions stored in memory. Previously, calculator chips could perform only certain pre-defined tasks.

While the Intel 4004 is widely considered the first, other projects were underway at the same time. Texas Instruments and General Instrument also created programmable microprocessors and debuted products based on them in the early 1970s. These designs were further refined through the mid-1970s, resulting in more powerful early CPUs like the Intel 8008 (1972) and its successor, the Intel 8080 (1974).

x86 Arrives

In 1978 Intel released the Intel 8086. It was released under some pressure, as competitors were already pushing out 16-bit designs and some 32-bit designs were right around the corner. Until then, Intel had no 16-bit processor.

To give itself an edge, Intel designed the 8086 so that software written for its earlier 8-bit processors could be carried over to it with little effort. This was the beginning of the x86 instruction set architecture. It impressed IBM, which decided to use the 8088 (a variant of the 8086) as the processor in the first IBM PC.

This wasn’t enough to get Intel out of the woods. In this era Intel was still just another player, one among many companies in the new business of making CPUs. Companies like National Semiconductor, Motorola, and even AT&T had their own processor designs which performed as well or better. The original Apple Macintosh, for example, used a Motorola processor. This pressured Intel to continue making backward-compatible processors, including the 16-bit 80186 and 80286, which arrived in the early 1980s, and the 32-bit 80386, which followed in 1985. This commitment to backward compatibility is what established x86 as a lasting instruction set architecture.

Through the late 1980s and the early 1990s, Intel's processors gradually became the processors of choice. The adoption of the x86 instruction set architecture started a snowball effect which eventually led to nearly all modern CPUs in personal computers being x86-based, as well as many processors in other devices. Having a set standard for programming CPUs made it easier for programmers to make software and for consumers to make informed choices.

Intel Challenged by AMD

The 1990s were largely a period in which competition consisted of increasing clock speeds and enlarging caches. During the late 1990s Intel was seriously challenged by AMD, and indeed AMD delivered some processors, like the K6, which performed better than Intel’s processors in many situations.

It was not until 2005, however, that processors began to change drastically from the formula of ever-higher clock speeds which reigned during the 1990s. In 2005 Intel and AMD both released dual-core processor designs. Intel released the Pentium D, and AMD released the Athlon 64 X2.

Since 2005, CPU design has taken a rather abrupt and dramatic turn. Intel and AMD now both offer native quad-core CPU designs, and both plan to offer six-core processor designs as early as mid-2010.

The Near Future

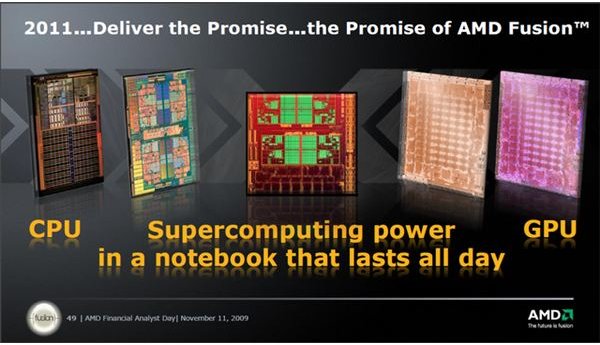

It is clear that the future of the CPU is parallel processing. After decades of existence as a single unit meant to perform a single task and then begin another, modern CPUs are departing significantly from that model and instead focusing on performance under multi-threaded workloads. Increased integration with GPU design is also becoming a focus, and Intel, Nvidia, and AMD are all working in that direction. As a result, CPU design can be expected to keep evolving over the next decade.