A Brief History of the Personal Computer

First and Second Generation Computers

The concepts of computer memory, storage, and processing have not changed much in the last 40 years. These components have certainly become faster, more efficient, and cheaper, but the core concepts of computing remain the same. The first true microcomputers were expensive and difficult to program. However, cost and difficulty alone do not explain why computers were slow to be adopted for tasks other than business. Individual users did not adopt microcomputers until they began to see the benefits of home computers.

First- and second-generation computers were the room- or building-sized behemoths that arose out of the technologies of the 1940s and 1950s. The earliest of these computers were little more than calculators, taking hours or days to do what a $5 calculator today can do in seconds. Third- and fourth-generation computers bear the most striking resemblance to modern computers. New technologies, and new paradigms for implementing them, eventually led to graphical user interfaces such as Microsoft Windows, hard drives in place of magnetic tape storage, and later, optical media such as CDs and DVDs.

Third Generation Computers

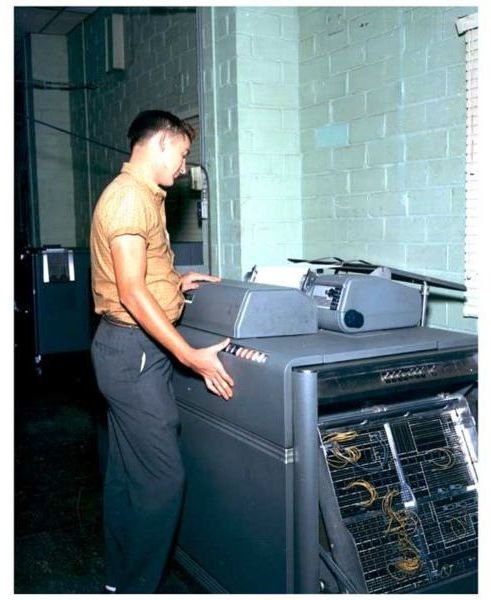

In the early 1960s, large computers were giving way to smaller, more compact computing devices. This shift was driven by the move from vacuum tubes to solid-state devices such as transistors, which let computers occupy much less space and made their integration into business far more practical. Discrete transistors later gave way to integrated circuits, making computers smaller still.

At over $7,000 apiece, Data General’s Nova computer had a huge impact on the world of computing. It was one of the first 16-bit minicomputers, with a word length built on the 8-bit byte still used today. Although technically a minicomputer, the Nova paved the way for the home microcomputers of the fourth-generation computing era.

In the early 1970s, home users got their first taste of computing with Don Lancaster’s TV Typewriter. Not much more than its name implies, the TV Typewriter allowed users to store about 100 pages of text on a cassette tape. For the first time, users could see their typed pages on an electronic display. Although not terribly practical, devices such as this one planted the idea in the minds of future users that computing was not just for large corporations and governments. Computing could be a useful tool in the home as well.

Fourth Generation Computers

In the early 1970s, Intel made its mark with the introduction of the Intel 4004 processor. Smaller than any other processor to date, the 4004 was originally designed for a Japanese calculator company. Around the same time, semiconductor Random Access Memory (RAM) chips, building on dynamic RAM work done at IBM, became available. Coupled with Intel’s processor, these chips made computers smaller and smaller. Perhaps more importantly, computers were now coming within the price range of home users.

Intel’s subsequent processors, such as the now-famous 8008 and 8080, gave rise to the 8086 and 8088 family of processors. These were followed in the 1980s by the 80386 chip, an early ancestor of Intel’s newest processors today. Even before the days of the 80386 chip, some companies had made headway in getting people to adopt computers for home use.

Based on Intel’s 8080 processor, the Altair 8800 and the IMSAI 8080 made computing affordable and accessible for home users. However, operating systems were still archaic, requiring many hours to learn. This was not so much because they were difficult, but because the paradigm shift necessary to motivate individuals to learn to use home computers had not yet occurred. Many of these early computers were seen as hobbies, not as tools for getting things done, as computers are seen today.

Modern Computing

Developments over the next decade, including graphics, more efficient memory, practical storage media such as floppy disks, and faster processors, made home computing much more of a reality. Popular home computers of the 1980s such as the Atari 400/800 and the Commodore 64 showed home users that computing could be a practical as well as an entertaining tool. By deploying a graphical user interface, Apple demonstrated that home computing could be easy and practical. Later and into the modern era, computers became modular, meaning that parts were no longer soldered onto circuit boards; parts could be swapped in and out when they failed or to increase computing power as newer and cheaper parts became available. More recently, the dominance of Microsoft’s Windows family of operating systems has made learning to use computers even simpler, with a list of features unheard of even a decade earlier.

Most recently, open source software, exemplified by the many varieties of the Linux operating system, has once again revolutionized the home computing industry. As Linux becomes simpler to use and as more software becomes available, an open source home operating system has become a real possibility. Unlike commercial operating systems, Linux has no single voice marketing directly to consumers, which makes its adoption less certain. Even so, Linux may become the operating system of choice for future generations of computers.

Conclusion

Through the various generations, the core concepts behind the home computer have not changed much since its introduction. The most important factor in the adoption of computers in the home had as much to do with people’s minds as it did with the development of the technologies themselves. Home users first had to be convinced that home computing was more than just a distraction or a hobby reserved for nerds with the money and patience to invest in those electronic “toys.” The computers of the 1960s and 1970s paved the way for the adoption of the popular home computers of the 1980s. Companies such as Intel, Atari, and Commodore helped create a vision in which home users saw computers as practical, entertaining, and worth the effort to learn.