Intel's Larrabee: Graphics Processing with CPUs

What is Larrabee?

Larrabee is Intel’s plan to use lots of cheap, heavily modified CPU cores to do the work of a graphics processor. When it was first announced, it wasn’t clear whether it would be used in highly parallel high-performance computing systems, consumer graphics cards, or both. Since then, it has become increasingly clear that Intel plans to use Larrabee to enter the desktop dedicated graphics market.

The argument behind this is convergence. CPUs are getting more parallel, like GPUs, and GPUs are getting more flexible and programmable, like CPUs. While convergence has pleasant connotations of things coming together, Intel and Nvidia have very divergent opinions of how this should happen.

A Quick Larrabee Overview

Larrabee’s cores are modified Pentiums - very modified. Out-of-order execution is removed to save space (allowing more cores on one chip) and cost. Out-of-order execution is a big help with the long instruction streams a CPU typically handles, but graphics usually involves larger numbers of shorter threads.

The x86 instruction set is extended to 64 bits (x86-64), and further extensions are added, again with graphics in mind. A SIMD vector unit is added to handle the calculations common in computer graphics. Traditional GPUs also run many SIMD units in parallel, but they use a larger number of simpler ones, while each Larrabee core has a single SIMD unit that is 16 lanes wide.
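To make "16 lanes wide" concrete, here is a minimal sketch of what one vector instruction does: the same multiply-add is applied to all 16 lanes in a single step. The function name `vector_madd` and the sample values are hypothetical, and plain Python lists stand in for hardware registers; this illustrates the idea only, not Larrabee's actual instruction set.

```python
LANES = 16  # width of one Larrabee vector unit, per the article

def vector_madd(a, b, c):
    """Return a*b + c lane by lane, as one 16-wide SIMD instruction would."""
    # Every operand must fill the whole vector register.
    assert len(a) == len(b) == len(c) == LANES
    return [x * y + z for x, y, z in zip(a, b, c)]

# Hypothetical inputs: 16 values scaled by 2 and offset by 1.
a = [float(i) for i in range(LANES)]
b = [2.0] * LANES
c = [1.0] * LANES
result = vector_madd(a, b, c)
```

A scalar processor would need 16 separate multiply-adds to produce the same `result`, which is why a GPU groups sixteen single-wide processors to get comparable throughput.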

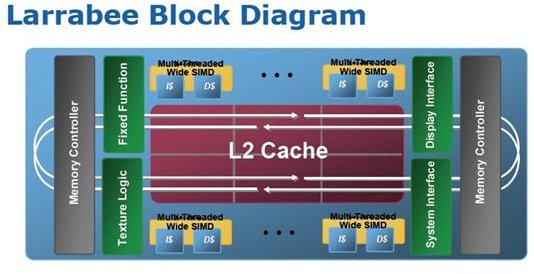

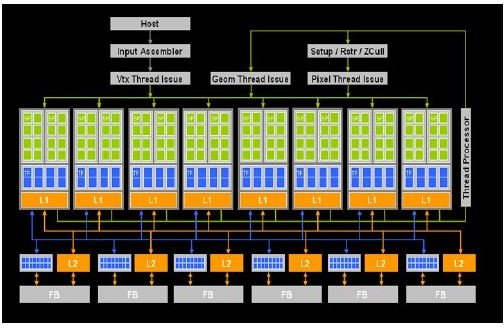

The similarities and differences are most obvious when looking at the block diagrams (above) of Larrabee (left) and Nvidia’s GeForce GPU (right). Each Larrabee core has a 16-wide SIMD unit, while each Stream Processor (the green bits on the Nvidia diagram) is single-wide but arranged in groups of sixteen, so we end up with something similar. This is especially true once you note that the GeForce’s L2 cache and frame buffer are shared like Intel’s, which isn’t clear in the diagrams.

One difference is that each core on the GPU has a few of its own texture units (the blue bits) as well as a larger shared bunch (along the bottom), while Larrabee only has the one block of them. Having to go further to reach the texture units shouldn’t be that big a deal because of another difference: Larrabee uses a ring bus, 512 bits wide in each direction, to connect the hardware components and cores (white line).
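As a rough sketch of why distance matters less on a bidirectional ring, the snippet below counts the fewest hops between two stops when a message can travel in either direction. The number of stops (16) and the assumption that traffic always takes the shorter way round are illustrative guesses, not published Larrabee details; only the 512-bit width comes from the article.

```python
RING_WIDTH_BITS = 512  # per direction, from the article

def ring_hops(src, dst, stops):
    """Fewest hops between two stops on a ring that runs both ways."""
    clockwise = (dst - src) % stops
    # Going the other way round covers the remaining stops.
    return min(clockwise, stops - clockwise)

# 512 bits is 64 bytes moved per transfer in each direction.
bytes_per_transfer = RING_WIDTH_BITS // 8

# Hypothetical ring with 16 stops: the worst case is half way round.
worst_case = max(ring_hops(0, d, 16) for d in range(16))
```

With two directions available, no stop on this hypothetical ring is more than 8 hops from any other, rather than up to 15 on a one-way ring.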

There is some advantage to having the cores connected to one another directly. A traditional GPU core has to send data that needs further processing through the Thread Processor, as seen above (green line). There is a cost to having the ring bus hook up everything, however: it takes up room on the chip and draws power. That’s room and power that could have been used for more cores.

Software Rasterization

Another difference, which is harder to make out from the diagrams, is that while Larrabee does have a texture block, there is no raster operation (ROP) hardware. Larrabee will do this work in software. That makes many cringe. If effective specialized hardware already exists for a job, doing it in software and sapping resources from the rest of the system is usually frowned upon.
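For a sense of what doing raster operations in software involves, here is a minimal sketch of two jobs a ROP unit typically handles: a depth test followed by an alpha blend into the frame buffer. The function `rop_write`, the buffer layout, and the "smaller depth is closer" convention are assumptions made for illustration; this is not Intel's renderer.

```python
def rop_write(framebuffer, depthbuffer, x, y, color, alpha, depth):
    """Depth-test a fragment, then blend it over the stored pixel.

    Returns True if the pixel was written, False if it was hidden.
    """
    # Depth test: skip fragments at or behind what is already stored.
    if depth >= depthbuffer[y][x]:
        return False
    dst = framebuffer[y][x]
    # Source-over blend: alpha * source + (1 - alpha) * destination.
    framebuffer[y][x] = tuple(
        alpha * s + (1.0 - alpha) * d for s, d in zip(color, dst)
    )
    depthbuffer[y][x] = depth
    return True

# Hypothetical 2x2 target: black pixels, depth cleared to the far plane.
fb = [[(0.0, 0.0, 0.0)] * 2 for _ in range(2)]
db = [[1.0] * 2 for _ in range(2)]

drawn = rop_write(fb, db, 0, 0, (1.0, 0.0, 0.0), 0.5, 0.5)   # half-red, in front
hidden = rop_write(fb, db, 0, 0, (0.0, 1.0, 0.0), 1.0, 0.8)  # green, behind
```

Each pixel written this way costs general-purpose instructions that dedicated ROP hardware would handle for free, which is the trade-off the critics point to.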

After all, the whole point of a GPU is that it can process graphics more effectively than a CPU running software to do the same job. That’s why GPUs are in computers in the first place. In a sense, Larrabee is a big step backward in how things are done. Of course, we won’t know with any certainty how this affects overall performance for a while. Which gets us to the obvious question:

Up Next: Will It Be any Good?

Now that we know a little bit about the Larrabee architecture, and that Intel is planning to use it for a consumer GPU, the next question is how it will perform. Again, considering that it probably won’t be around until next year, there is no definitive answer. But we have put together the more reliable rumours and observations in an article on what to expect from Larrabee performance.